Journal of Science Policy & Governance | Volume 16, Issue 01 | April 13, 2020

Policy Memo: Conducting Article 36 Legal Reviews for Lethal Autonomous Weapons

Jared M. Cochrane

United States Military Academy, Department of Physics and Nuclear Engineering, West Point, NY

Keywords: artificial intelligence (AI); lethal autonomous weapons (LAWs); Article 36; Geneva Conventions; meaningful human control

Executive Summary: The rapid growth in AI’s capabilities and adaptability over the past decade has revolutionized modern warfare by making fully autonomous weapons a near-term reality. This paper describes Article 36 of Additional Protocol 1 to the Geneva Conventions, which requires legal reviews of new weapons, including Lethal Autonomous Weapons (LAWs), and proposes adding two sections to current U.S. LAWs testing policy to facilitate standardized LAWs testing on an international scale. Given AI’s burgeoning capabilities in military weapons, standardized LAWs testing procedures are critical to ensuring that future LAWs conform to international military law. Because Article 36 does not specify a standardized LAWs testing methodology, the U.S. should work to standardize its domestic LAWs review process by modifying Department of Defense (DoD) Instruction 5500.15. Most nations developing autonomous weapons aspire to standardized LAWs testing procedures but lack an example to guide them (Boulanin 2015). By standardizing its own LAWs testing procedures, the U.S. could provide that example. U.S. domestic policy can set a useful precedent even if strategic competitors such as Russia and China, driven by a deep-seated desire to challenge U.S. LAWs supremacy, are unlikely to follow it.

I. Introduction and Background

Lethal Autonomous Weapons (LAWs) are defined by the Congressional Research Service as “weapon systems that use sensor suites and computer algorithms to independently identify a target and employ an onboard weapon system to engage and destroy the target” (Congressional Research Service 2019, 1). Whereas conventional weapons require the direct action of a human operator to function, LAWs can complete tasks on their own, without human input. The increasing international prevalence of LAWs over the past decade has raised concerns among numerous governments, scholars, and engineers regarding the ethical, legal, and social implications of such technology (Backstrom and Henderson 2012).

In November 2014, the United Nations Convention on Certain Conventional Weapons (CCW) convened the first international meeting to address the development of LAWs (United Nations Convention on CCW 2014). The meeting concluded that the moral challenges posed by LAWs necessitated “the implementation of weapons reviews” to ensure that emerging LAWs technology conforms to international law, specifically Articles 35 and 36 of Additional Protocol 1 to the Geneva Conventions (United Nations Convention on CCW 2014). These articles prohibit the use of “weapons, projectiles and material and methods of warfare of a nature to cause superfluous injury or unnecessary suffering” and of methods that damage “the natural environment,” and Article 36 obliges states to review new weapons for compliance (Lawan 2006, 13).

i. Current LAWs testing issues

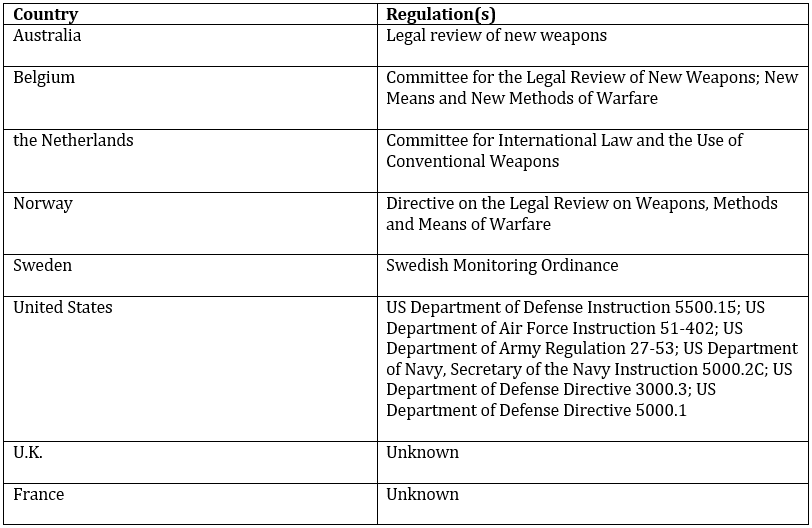

At its 2014 meeting, the CCW identified a major flaw in the current LAWs legal review process: the absence of clear guidance on how legal reviews should be conducted and enforced (Boulanin 2015). Of the 174 states party to Additional Protocol 1, only eight are known to have legal review mechanisms in place to ensure conformity with these articles: Australia, Belgium, France, the Netherlands, Norway, Sweden, the United Kingdom, and the United States (Lawan 2006). As the Stockholm International Peace Research Institute states, “Article 36 reviews…are national procedures beyond any kind of international oversight, and there are no established standards with regard to how they should be conducted” (Boulanin 2015, 2). Because the U.S. is a world leader in the development of autonomous weapons, it should play a leading role in ensuring that international law guides future LAWs development.

To play an international role in standardizing LAWs testing, the U.S. Department of Defense (DoD) needs to develop and refine its domestic Article 36 legal review procedures. Several U.S. regulations guide Article 36 reviews of LAWs (Congressional Research Service 2019). DoD Instruction 5500.15 establishes broad guidelines to ensure that newly developed weapons conform to international law (Lawan 2006). However, these guidelines do not set out standardized legal review procedures specific to LAWs, which pose unique challenges concerning moral responsibility and compliance with international law. Major Guetlein of the U.S. Naval War College explains that when “the trigger puller is a machine”, ethical accountability for an action’s outcome is much harder to determine than with conventional weapons, since LAWs can “engage targets autonomously without a man-in-the-loop” (Guetlein 2005, 12).

To address the lack of standardization in LAWs testing, this article proposes adding two principles to DoD Instruction 5500.15. First, the instruction should state that the battlefield commander bears sole responsibility for LAWs’ actions in combat: because commanders are morally responsible for the employment of conventional weapons, they should also bear moral responsibility for the weapons in their unit that act autonomously. Second, the standard of meaningful human control, as framed by U.S. Air Force Lieutenant Colonel Adam Cook, should be adopted as a common metric for gauging the compatibility of LAWs with international military law.

II. Analysis

This paper focuses on a technical critique of LAWs: their testing under international military law lacks any coherent standardization among the many states bound by Article 36. The paper’s central argument is that, by implementing the proposed policy recommendations, the U.S. can show the international community how to standardize LAWs legal testing. Table 1 lists the countries that conduct Article 36 legal reviews. Among them, the U.S. conducts the most detailed reviews, since it is the only country with review methodologies for each branch of its military. Each country listed in Table 1 has an overarching document requiring its military to conduct some form of new weapons testing to ensure that newly developed weapons avoid “superfluous injury or suffering” (Lawan 2006, 13).

While DoD Instruction 5500.15 calls for “a legal review…conducted of all weapons…to ensure that their intended use…is consistent with…the laws of war” (Department of Defense 1974, 1), Army Regulation 27-53, SECNAV Instruction 5000.2C, and Air Force Instruction 51-402 guide legal reviews more specifically in the Departments of the Army, Navy, and Air Force, respectively. This branch-level specificity places the U.S. in a strong position to provide international leadership on LAWs legal reviews. As a prominent member of the international community, the U.S. has not only the ability but also an obligation to model the standardization of LAWs legal reviews.

i. Ethical challenges of LAWs

Lethal autonomy presents several unique ethical, social, economic, and technical challenges that current DoD regulations largely leave unaddressed. First, current DoD policy does not identify who should bear moral responsibility for a LAW’s actions on the battlefield. Moral responsibility for using a conventional weapon generally falls on the commander of the soldier who employs it. For example, if an air defense battery mistook an airliner for an enemy aircraft and shot it down, the battery commander would be morally responsible for the ethical ramifications of the unit’s actions. But who bears responsibility if an autonomous drone or tank kills an innocent civilian: the engineer, the commander, or the weapon itself? Current DoD policy leaves this question unanswered, and the gap affects Article 36 reviews, because determining whether an autonomous weapon meets international legal standards requires a clear understanding of who bears ethical responsibility for the technology during operation. Clarifying moral responsibility for LAWs is therefore a key first step toward standardizing Article 36 testing methodology.

ii. Social challenges of LAWs

In addition to the moral challenges introduced by LAWs, many scholars argue that lethal autonomy also poses social challenges that threaten human safety. For example, Dr. Heather Roff, a scholar of warfare ethics at the University of Denver, argues that LAWs should be banned because they take strategic decision-making away from humans and give it to machines that cannot make moral decisions on the battlefield (Roff 2014). While this paper does not focus on the social aspects of advanced LAWs, any legal solution to the current lack of LAWs testing standardization must answer the counterargument that LAWs will never be able to make moral decisions on the battlefield.

Establishing the legitimacy of Article 36 tests requires recognizing that moral decision-making on the battlefield rests on two facets: discriminating between targets, and weighing military gains against collateral damage. Addressing both facets, Professor Michael Schmitt of the University of Exeter Law School argues that the AI algorithms in LAWs may, given enough technological development, eventually match or outperform humans at discriminating between targets and weighing military gains against costs (Schmitt 2013). This article therefore asserts that, rather than being banned, autonomous weapons should continue to develop under a standardized, well-defined set of legal principles grounded in Article 36 of Additional Protocol 1.

iii. Economic challenges of LAWs

Another challenge posed by LAWs is the high cost of development and testing. For reference, about one-third of the total $9.39 billion U.S. budget for drone technology in 2018 was spent on testing and evaluation (Stohl 2018). The significant share of the defense budget that LAWs testing would require makes cost an “obstacle to...evaluation”, especially as LAWs technology grows more complex (Boulanin 2015, 16). Article 36 itself does not address the economic aspect of LAWs testing, but future Article 36 reviews should take these costs into account.

iv. Lack of standardization in testing LAWs

The most pressing technical issue with Article 36 is the absence of international provisions establishing a standardized methodology for conducting reviews: there is no clear standard for how LAWs should be tested. Formulating a standardized LAWs testing procedure therefore requires a foundational principle that defines the basic characteristics LAWs must satisfy to conform to international law. One promising candidate for that role is meaningful human control. In Taming Killer Robots: Giving Meaning to the “Meaningful Human Control” Standard for Lethal Autonomous Weapon Systems, Lieutenant Colonel Adam Cook defines “meaningful human control” using three criteria: being “readily understandable to human operators”, providing “traceable feedback on the system status”, and providing “clear procedures for trained operators to activate and deactivate system functions” (Cook 2019, 17). Integrating this principle into current testing procedures would supply the common evaluation metric that a standardized Article 36 methodology requires.

III. Proposed course of action

The problems that LAWs testing presents admit many potential solutions, all of which would realistically take several years to implement. This section considers two.

i. Economic solution

The first potential solution would address the economic aspects of Article 36 testing by giving military contractors the funding needed to sustain LAWs evaluation as the technology becomes more expensive to evaluate. Pursuing this course of action would require financial resources on the order of $4 billion (Stohl 2018). Although this would address the domestic economic side of the LAWs testing problem, it would not extend to the many countries that lack the financial resources to sustain comparable testing efforts. Furthermore, competitors such as Russia and China could view increased U.S. LAWs funding as an attempt to establish international LAWs superiority, which could lead to a LAWs arms race.

ii. Ethical solution

A second potential course of action would address the ethical problem of LAWs testing by modifying the existing methodology in DoD Instruction 5500.15. This would allow the U.S. to set an international example of how to conduct Article 36 reviews of LAWs without a significant financial burden. The next section explains this course of action in more detail.

IV. Policy recommendations

To address the lack of standardization in current Article 36 LAWs tests, the DoD should adopt the second of these two courses of action by adding two provisions to the current version of Instruction 5500.15: one identifying the battlefield commander as bearing sole moral responsibility for LAWs’ actions, and one integrating meaningful human control as a common metric for gauging LAWs’ compliance with international military law. Given the strong influence of the U.S. in the UN, initial steps to standardize LAWs testing domestically can serve as an international example for other nations to follow.

i. First policy recommendation

The first policy recommendation is to add to DoD Instruction 5500.15 a provision stating that the battlefield commander bears sole moral responsibility for a LAW’s actions in battle. During each LAW’s testing and battlefield operation, the commander should bear sole ethical and legal culpability for its actions, just as commanders bear full responsibility for the actions of human-operated conventional weapons. By clearly defining who is responsible for a LAW’s decisions, this section would provide the moral understanding that Article 36 standardization presupposes. This proposal is consistent with current ethical reasoning about conventional weapons (Guetlein 2005, 17). Because commanders are morally responsible for every action taken by the weapons under their command, it follows that this rationale should extend to weapons that can operate autonomously.

ii. Second policy recommendation

The second policy recommendation is to add a section establishing meaningful human control as the ultimate standard against which Article 36 tests are conducted. The more closely a LAW demonstrates the three principles of meaningful human control (understandable action, traceable feedback, and readily deactivated functions), the more fully it satisfies international law. By defining a common goal to underpin all LAWs tests, integrating meaningful human control into Instruction 5500.15 provides a critical first step toward standardizing LAWs testing domestically and internationally. Some scholars may criticize this approach for imposing a kind of tunnel vision on how LAWs’ conformity to Article 36 is understood; however, the benefits of standardized LAWs testing procedures that keep the international LAWs community accountable throughout the development process outweigh these theoretical critiques (Roff 2014; Johnson and Axinn 2013).

Implementing standardized LAWs testing would require coordination with the Departments of the Army, Navy, and Air Force. To help each branch balance the unique nature of its mission set against the need for a common standard, the DoD should appoint a committee to oversee the proposed modification to Instruction 5500.15. The DoD should also coordinate with each department to ensure that its modified Article 36 provisions nest within the overall DoD LAWs testing goal of meaningful human control.

V. Summary

This paper began by tracing a brief history of LAWs development and regulation. Although Articles 35 and 36 call for national legal reviews of new weapons, including LAWs, they give no guidance on how such reviews are to be conducted. Focusing on the unique moral challenges posed by LAWs, this paper proposes that the DoD integrate two additional sections into Instruction 5500.15. The first would give the commander complete moral responsibility for LAWs operations, establishing the uniform and clear structure of moral culpability that standardized LAWs testing requires. The second would integrate the principle of meaningful human control so that all LAWs legal tests are conducted with the same overarching principle in mind. This common principle is a first step toward standardizing Article 36 legal reviews across the international LAWs community.

References

- Backstrom, Alan, and Ian Henderson. 2012. “New Capabilities in Warfare: An Overview of Contemporary Technological Developments and the Associated Legal and Engineering Issues in Article 36 Weapons Reviews.” International Review of the Red Cross 94(886). https://doi.org/10.1017/S1816383112000707

- Boulanin, Vincent. 2015. Implementing Article 36 Weapons Reviews in the Light of Increasing Autonomy in Weapon Systems. SIPRI Insights on Peace and Security, 1-28. https://www.sipri.org/sites/default/files/files/insight/SIPRIInsight1501.pdf

- Boulanin, Vincent, and Maaike Verbruggen. 2017. Mapping the Development of Autonomy in Weapon Systems. SIPRI Insights on Peace and Security. https://www.sipri.org/sites/default/files/2017-11/siprireport_mapping_the_development_of_autonomy_in_weapon_systems_1117_1.pdf

- Congressional Research Service. 2019. Defense Primer: U.S. Policy on Lethal Autonomous Weapon Systems. Congressional Research Service. https://crsreports.congress.gov/product/pdf/IF/IF11105

- Cook, Adam. 2019. Taming Killer Robots: Giving Meaning to the “Meaningful Human Control” Standard for Lethal Autonomous Weapon Systems. Judge Advocate General School. https://www.airuniversity.af.edu/AUPress/Display/Article/1879811/taming-killer-robots-giving-meaning-to-the-meaningful-human-control-standard-fo/

- Department of the Air Force. 2018. AFI 51-402. Department of the Air Force. http://static.e-publishing.af.mil/production/1/af_ja/publication/afi51-402/afi51-402.pdf

- Department of the Army. 1979. Review of Legality of Weapons Under International Law. AR 27-53. Department of the Army. https://fas.org/irp/doddir/army/ar27-53.pdf

- Department of Defense. 1974. Review of Legality of Weapons Under International Law. Instruction 5500.15. Department of Defense. https://ihl-databases.icrc.org/applic/ihl/ihl-nat.nsf/implementingLaws.xsp?documentId=C5755056B44D518CC12576EB004A29E5&action=openDocument&xp_countrySelected=US&xp_topicSelected=GVAL-992BUL&from=state&SessionID=DKTT2ARUAN

- Department of the Navy. 2008. Acquisition and Capabilities Guidebook. SECNAV Instruction 5000.2C. Department of the Navy. https://www.doncio.navy.mil/ContentView.aspx?id=344

- Guetlein, Michael A. 2005. Lethal Autonomous Weapons– Ethical and Doctrinal Implications. Joint Military Operations Department Naval War College. https://apps.dtic.mil/dtic/tr/fulltext/u2/a464896.pdf

- Johnson, Aaron, and Sidney Axinn. 2013. “The Morality of Autonomous Robots.” Journal of Military Ethics 12(2): 129-141. https://doi.org/10.1080/15027570.2013.818399

- Lawan, Kathleen. 2006. A Guide to the Legal Review of New Weapons, Means, and Methods of Warfare. International Review of the Red Cross. https://www.icrc.org/en/publication/0902-guide-legal-review-new-weapons-means-and-methods-warfare-measures-implement-article

- Roff, Heather M. 2014. “The Strategic Robot Problem: Lethal Autonomous Weapons in War.” Journal of Military Ethics 13(3): 211-227. https://doi.org/10.1080/15027570.2014.975010

- Schmitt, Michael N. 2013. “Autonomous Weapon Systems and International Humanitarian Law: A Reply to the Critics.” Harvard National Security Journal Features, 1-37. https://harvardnsj.org/2013/02/autonomous-weapon-systems-and-international-humanitarian-law-a-reply-to-the-critics/

- Stohl, Rachel. 2018. An Action Plan on U.S. Drone Policy: Recommendations for the Trump Administration. Stimson Center. https://www.stimson.org/sites/default/files/file-attachments/Stimson%20Action%20Plan%20on%20US%20Drone%20Policy.pdf

- United Nations Convention on Certain Conventional Weapons (CCW). 2014. Report of the 2014 Informal Meeting of Experts on Lethal Autonomous Weapons Systems (LAWS). United Nations Convention on Certain Conventional Weapons. https://unog.ch/80256EE600585943/(httpPages)/8FA3C2562A60FF81C1257CE600393DF6?OpenDocument

Jared M. Cochrane is a West Point physics major whose research has focused on quantum field theory and applied ethics. His research project, Energy Conditions for a Quantized Scalar Field in the Presence of a Mamaev-Trunov Potential, uses relativistic quantum field theory to refute a physicist’s 2011 claim to have violated the quantum inequality. At MIT, Jared has applied quantum theory to help construct optical systems that support long-range free-space test beds for quantum networking via induced photon entanglement. In applied ethics, he presented a paper, Jus in Bello and the Just Cause of War, at the 2019 Conference on the Ethics of War and Peace.

Author Disclaimer: The views expressed herein are those of the author and do not reflect the position of the United States Military Academy, the Department of the Army, or the Department of Defense.

DISCLAIMER: The findings and conclusions published herein are solely attributed to the author and not necessarily endorsed or adopted by the Journal of Science Policy and Governance. Articles are distributed in compliance with copyright and trademark agreements.

ISSN 2372-2193
